A new cultural and political flashpoint has emerged in 2025 as a high-profile federal initiative dubbed “Prevent Woke AI” gains momentum. Backed by a coalition of lawmakers, think tanks, and tech watchdogs, the initiative aims to curb what supporters describe as “left-leaning bias” in artificial intelligence models. But critics warn it could lead to censorship, ideological manipulation, and the politicization of science and technology.

At the core of the controversy lies a fundamental question: Who decides what AI should say? As AI systems—like chatbots, recommendation engines, and search tools—become part of everyday life, the debate over neutrality, values, and representation in their outputs has become impossible to ignore.

🔍 The Initiative’s Origins

The “Prevent Woke AI” campaign was launched in early 2025 by a congressional caucus concerned with transparency and viewpoint diversity in machine learning models. The group, which describes itself as bipartisan but is composed largely of conservative and libertarian lawmakers, asserts that many large language models and recommendation systems display “unbalanced perspectives,” particularly on topics like race, gender, climate, and history.

Several high-profile testimonies from whistleblowers and AI engineers have supported these claims. Some allege that certain platforms prioritize progressive viewpoints while suppressing traditional, religious, or nationalist perspectives.

The initiative proposes new regulatory frameworks, transparency audits, and guidelines for AI developers to ensure ideological neutrality in government-funded or widely distributed AI systems.

🧠 How Bias Forms in AI

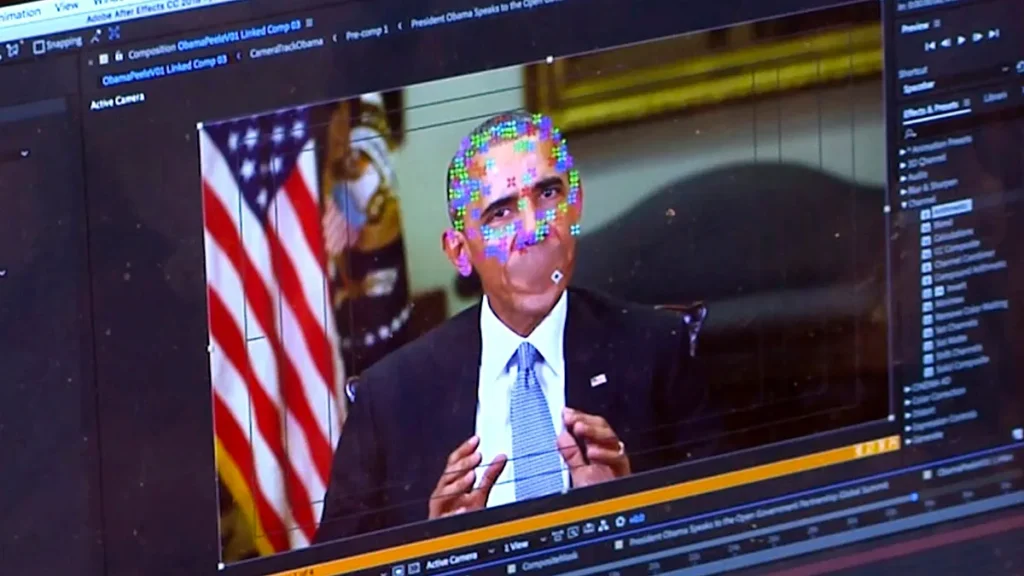

AI bias isn’t new. Most models learn from massive data sets collected from books, news articles, social media, and websites—sources that naturally contain human bias. When left unchecked, these biases can be embedded in AI outputs.

For instance, a chatbot might consistently associate certain professions with one gender, or describe one political ideology more favorably than another. AI systems used in hiring, medical triage, or education may also reflect and reinforce systemic biases from their training data.
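To make the mechanism concrete, here is a minimal sketch of how a skew in training data can carry over into outputs. Everything in it is an assumption made for illustration: the tiny corpus, the 75/25 split, and the function names are hypothetical, and real language models are far more complex than a frequency counter.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus of (profession, pronoun) pairs.
# The 3-to-1 skew is invented purely for illustration.
CORPUS = [
    ("nurse", "she"), ("nurse", "she"), ("nurse", "she"), ("nurse", "he"),
    ("engineer", "he"), ("engineer", "he"), ("engineer", "he"), ("engineer", "she"),
]


def train(pairs):
    """Count how often each pronoun appears with each profession."""
    counts = defaultdict(Counter)
    for profession, pronoun in pairs:
        counts[profession][pronoun] += 1
    return counts


def predict(counts, profession):
    """Return the pronoun seen most often with the profession."""
    return counts[profession].most_common(1)[0][0]


def pronoun_share(counts, profession, pronoun):
    """Fraction of training examples for a profession that use the pronoun."""
    total = sum(counts[profession].values())
    return counts[profession][pronoun] / total


model = train(CORPUS)
print(predict(model, "nurse"))               # she
print(predict(model, "engineer"))            # he
print(pronoun_share(model, "nurse", "she"))  # 0.75
```

In this toy setup, a 75 percent skew in the data becomes a 100 percent skew in the output, because the model always picks the most frequent option. That is one simple way an imbalance in the source material can be amplified rather than merely repeated.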

“Bias isn’t always intentional,” says Dr. Malika Jones, an AI ethics researcher. “It can result from incomplete data, feedback loops, or design decisions made without enough stakeholder input.”

The challenge is not simply removing bias—it’s deciding which biases to remove and who makes that call.

⚖️ Free Speech vs. Responsible AI

At the heart of the “Prevent Woke AI” push is a broader ideological struggle over speech, power, and digital governance.

Supporters argue that current AI platforms, particularly those run by tech giants, lean toward progressive values on issues such as race relations, climate change, immigration, and gender identity. They see the initiative as a corrective measure that brings balance and transparency to AI’s growing influence over public discourse.

“We’re not against AI—we’re against AI being used as a propaganda tool,” said Senator Marcus Deane (R-FL), one of the bill’s lead sponsors. “Americans should be able to ask a question without getting a lecture.”

On the other hand, critics argue the initiative threatens to politicize technology and suppress historically marginalized perspectives. They warn that legislating AI outputs risks undermining free inquiry and scientific integrity.

“This is about power, not fairness,” says Aisha Feldman, Director of Digital Rights at NetEquity. “If we force AI to ‘balance’ facts with opinions, we degrade public trust in knowledge and weaponize neutrality.”

🧩 Tech Industry’s Response

The tech sector has responded with caution. Some AI developers have expressed support for greater transparency, model explainability, and user customization. Companies like OpenMindAI and Dialogis have begun offering filters that allow users to choose between “academic,” “conversational,” and “balanced” modes in AI responses.

However, many leading AI firms have warned against government overreach. They argue that political mandates about model behavior could harm innovation, complicate deployment, and undermine public trust in AI outputs.

Behind the scenes, industry insiders worry about the costs of increased auditing and the legal gray area surrounding compelled speech by algorithms.

“There’s no such thing as a completely neutral AI,” says Lila Chen, Chief Ethics Officer at SynthetIQ. “Any effort to impose neutrality must be open, inclusive, and properly targeted.”

🌐 Broader Implications

The “Prevent Woke AI” debate reflects a larger societal tension around trust in institutions, media, and emerging technology. With AI increasingly shaping how people learn, work, and vote, these systems are no longer seen as mere tools—they’re cultural forces.

Some fear that escalating culture wars over AI could damage public confidence in all forms of digital information. Others hope the controversy forces developers to adopt more open-source practices, user governance models, and diverse stakeholder input.

“This is a wake-up call,” says Dr. Jorge Menendez, a digital policy scholar. “We need to treat AI as part of our civic infrastructure—and that means including everyone in decisions about how it works.”

🚨 What Comes Next?

As of mid-2025, the Prevent Woke AI Act is under congressional review. Key provisions include:

- Requiring AI developers to disclose training data sources for government-funded models

- Mandating ideological audits for consumer-facing platforms with over 50 million users

- Establishing a National AI Bias Review Board with cross-partisan oversight

- Allowing users to toggle between interpretive filters in AI chat interfaces
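As a rough illustration of how these provisions might be scoped, the sketch below maps a platform's attributes to the obligations listed above. It is an assumption-laden reading of the summary, not the bill's text: the `Platform` fields, the function name, and the strict "more than 50 million" comparison are all hypothetical, and the review board is omitted because it is an institution rather than a per-platform duty.

```python
from dataclasses import dataclass
from typing import List

# "Over 50 million users" as summarized in the article; how users would be
# counted is not stated, so this threshold check is an assumption.
AUDIT_USER_THRESHOLD = 50_000_000


@dataclass
class Platform:
    """Hypothetical description of an AI product for illustration only."""
    name: str
    users: int
    government_funded: bool
    consumer_facing: bool
    has_chat_interface: bool


def applicable_provisions(platform: Platform) -> List[str]:
    """Return the listed provisions that would plausibly apply to a platform."""
    provisions = []
    if platform.government_funded:
        provisions.append("disclose training data sources")
    if platform.consumer_facing and platform.users > AUDIT_USER_THRESHOLD:
        provisions.append("ideological audit")
    if platform.has_chat_interface:
        provisions.append("user-selectable interpretive filters")
    return provisions


example = Platform(
    name="ExampleChat",  # made-up product name
    users=60_000_000,
    government_funded=False,
    consumer_facing=True,
    has_chat_interface=True,
)
print(applicable_provisions(example))
# ['ideological audit', 'user-selectable interpretive filters']
```

Under this reading, a privately funded chatbot with 60 million users would face audits and filter requirements but no training-data disclosure, while a platform with exactly 50 million users would fall just below the audit threshold.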

It’s unclear how much of the bill will pass intact, but the surrounding debate is likely to shape AI policy for years to come.

🧠 FAQs: The “Prevent Woke AI” Initiative and AI Bias

1. What is the “Prevent Woke AI” initiative?

It’s a federal policy proposal designed to ensure AI systems do not promote ideological bias—particularly perceived progressive or “woke” values. It calls for audits, transparency, and regulation of AI outputs used in consumer or public systems.

2. Why do some believe AI is “woke”?

Supporters of the initiative argue that many AI models reflect the progressive leanings of their developers or training data sources. For example, they say a model might consistently favor certain social justice narratives or avoid presenting conservative viewpoints.

3. Is AI inherently biased?

To a degree, yes. Most AI systems reflect the data they’re trained on, which includes existing societal biases. Bias isn’t always political; it can also be racial, gendered, geographic, or economic.

4. What’s wrong with trying to remove bias from AI?

Nothing, in principle. The controversy lies in who decides what counts as bias, and whether attempts to “balance” models could compromise accuracy, fairness, or free expression.

5. How does the initiative propose to fix bias?

The bill calls for transparency in training data, mandatory ideological audits, customizable AI filters, and a national oversight board to monitor compliance.