In 2025, America faces a rapidly evolving threat to truth: AI-powered disinformation. Deepfakes, voice clones, synthetic news anchors, and hyper-personalized fake content have ushered in what lawmakers are calling “Fake News 2.0”—a new era where reality itself can be engineered at scale.

With the 2026 midterm elections approaching and foreign influence operations continuing to escalate, Congress has convened a number of high-profile hearings and bipartisan task forces to address the explosive rise of AI-generated disinformation.

With the stakes higher than ever, policymakers, tech CEOs, and cybersecurity experts are now racing to define the limits of free expression, the responsibilities of tech platforms, and the legal boundaries of synthetic content.

⚠️ The Problem: Disinformation Goes High-Tech

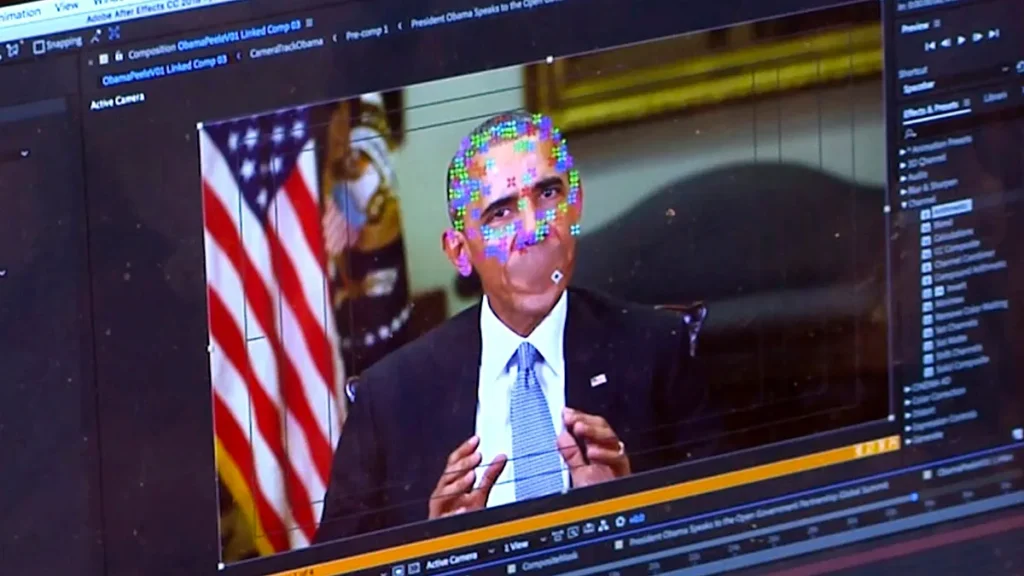

Unlike earlier misinformation campaigns, which were often recognizable by their poor grammar and crude, misleading memes, Fake News 2.0 leverages advanced AI models to generate convincing lies. Tools like generative adversarial networks (GANs), large language models (LLMs), and synthetic voice software can:

- Create fake interviews with public figures

- Fabricate news articles with citations

- Mimic real-time breaking news broadcasts using AI avatars

- Produce hyper-personalized propaganda tailored to individuals’ browsing habits

This level of manipulation can distort public opinion, sway elections, incite violence, and erode trust in institutions, journalism, and democracy itself.

🔍 Recent AI Disinformation Incidents

In the past year alone, the U.S. has witnessed several chilling examples of synthetic disinformation:

- A viral deepfake video showed a presidential candidate endorsing extremist views. It was shared 17 million times before being debunked.

- Fake audio clips of Supreme Court justices “leaking” opinions circulated on encrypted forums.

- Foreign actors used AI-generated articles to pose as American journalists, publishing false stories on niche websites.

- An AI-powered chatbot impersonated a government agency and spread fake evacuation orders during a wildfire crisis.

These incidents prompted the Department of Homeland Security and the Federal Election Commission to issue urgent warnings about the weaponization of AI ahead of future national events.

🏛️ Congress Responds: Hearings, Bills, and Regulations

In response, Congress has introduced several initiatives aimed at mitigating the spread of AI-generated falsehoods:

1. The AI Transparency & Labeling Act

This bipartisan bill would require:

- All AI-generated media to carry mandatory watermarks or disclaimers

- Tech companies to report mass synthetic content distribution

- Developers of AI tools to build in traceability mechanisms

2. The Digital Authenticity Standards Commission

A proposed federal body to:

- Set technical guidelines for content authentication

- Work with international allies to develop global verification standards

- Advise social media platforms and content hosts on AI abuse detection

3. Modifications to Section 230 of the Communications Decency Act

There are growing calls to limit liability protection for platforms that knowingly allow unchecked AI disinformation to proliferate, especially if it incites harm or misleads voters.

🖥️ Tech Platforms in the Hot Seat

The CEOs of Meta, X (formerly Twitter), YouTube, and several emerging generative AI startups were recently grilled by Congress.

Some have introduced voluntary controls:

- “AI-generated” tags on videos and posts

- Improved deepfake detection algorithms

- Collaboration with fact-checking organizations

But critics argue these efforts are reactive, inconsistent, and insufficient. Platforms face pressure to balance free speech with proactive moderation—a tension that grows more complex with each AI advancement.

🌐 Foreign Influence and Election Security

U.S. intelligence agencies have confirmed that Russia, China, and Iran are actively experimenting with AI disinformation to:

- Undermine public confidence in elections

- Amplify domestic unrest

- Discredit political leaders

- Spread conspiracy theories through microtargeted campaigns

With the 2026 elections approaching, the U.S. Cybersecurity and Infrastructure Security Agency (CISA) has launched AI Threat Labs to monitor and intercept synthetic election interference.

🧠 The Psychological Cost: “Truth Fatigue”

Experts warn that even when AI disinformation is identified and debunked, it still undermines trust. This phenomenon—dubbed “truth fatigue”—leaves users exhausted, unsure what to believe, and vulnerable to disengagement.

“The more sophisticated the lie, the more doubt it sows,” says Dr. Lena Ashcroft, a cognitive scientist. “We’re not just fighting for truth—we’re fighting to keep people caring about the truth.”

🧩 Tools Emerging to Fight Back

To counter the rise of synthetic disinformation, developers and researchers are working on:

- Content provenance tools, like cryptographic signatures on authentic media

- Browser plug-ins that flag likely deepfakes in real time

- AI vs. AI countermeasures, where detection models are trained to spot disinformation from other models

- Public awareness campaigns on how to spot AI-generated manipulation
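
The first item above, content provenance, comes down to attaching a verifiable tag to a media file so that any later alteration can be detected. The sketch below illustrates the idea only. It uses an HMAC with a shared secret from Python's standard library as a stand-in, whereas real provenance systems typically rely on public-key signatures so that anyone can verify without holding the secret. The function names, the key, and the sample bytes are all hypothetical.

```python
import hashlib
import hmac


def sign_media(media_bytes: bytes, secret_key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag that binds the media bytes to the key."""
    return hmac.new(secret_key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, tag: str, secret_key: bytes) -> bool:
    """Recompute the tag and compare it to the supplied one in constant time."""
    expected = sign_media(media_bytes, secret_key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    # Hypothetical key and content, for illustration only.
    key = b"hypothetical-newsroom-key"
    original = b"bytes of an authentic video file"
    tag = sign_media(original, key)

    # An untouched copy should verify; an altered copy should not.
    print("authentic copy verifies:", verify_media(original, tag, key))
    print("altered copy verifies:", verify_media(original + b" edited", tag, key))
```

A tag like this only shows that the bytes have not changed since they were signed. It says nothing about whether the original recording was truthful, which is why provenance is listed alongside detection and public awareness rather than in place of them.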

Some universities have begun teaching “digital literacy for the AI age,” helping students identify manipulated content and understand algorithmic influence.

🔮 Looking Ahead: Can We Preserve Trust?

Congress’s challenge is not just legal—it’s existential. In a world where “seeing is no longer believing,” the fabric of democracy could fray without clear standards, responsible innovation, and public resilience.

Ultimately, the future of truth may depend on a mix of:

- Smart regulation

- Transparent technology

- Educated citizens

- Cross-sector collaboration

But one thing is clear: Fake News 2.0 has arrived—and it won’t be going away quietly.

FAQs: AI-Powered Disinformation & Congress’s Response

1. What is Fake News 2.0?

Fake News 2.0 refers to AI-generated disinformation that mimics real voices, faces, articles, and news broadcasts. It’s far more convincing and scalable than traditional misinformation.

2. How is Congress responding to AI disinformation?

Congress is debating several new initiatives, including:

- The AI Transparency & Labeling Act

- Creation of a federal Digital Authenticity Standards Commission

- Revisions to Section 230 of the Communications Decency Act

3. Are platforms required to label AI content now?

Not yet. There is no federal mandate, but platforms such as YouTube, Meta, and TikTok are voluntarily tagging AI-generated content. Congress hopes to make labeling a legal requirement soon.

4. How can I tell if something online is AI-generated?

Look for:

- Unnatural blinking or facial movement in videos

- Inconsistent lighting or voice tone

- No credible source attribution

- “AI-generated” tags (if available)

Browser extensions and reverse image searches can also help.

5. Are foreign governments using AI to target Americans?

Yes. Intelligence agencies have confirmed that countries like Russia, China, and Iran are experimenting with AI disinformation tools to influence U.S. public opinion and elections.
