Artificial Intelligence (AI) has revolutionized many industries—but in the realm of politics, it’s becoming a double-edged sword. As the 2024 U.S. election cycle heats up, AI-generated deepfakes, disinformation, and synthetic content are creating unprecedented challenges for candidates, voters, and regulators alike.

The line between truth and fiction is blurring fast, with deepfake videos and AI-manipulated audio posing a serious threat to the integrity of democratic elections. While political campaigns experiment with AI tools for outreach and efficiency, critics warn that misuse could undermine public trust and destabilize the democratic process.

The Rise of Deepfakes in Political Campaigns

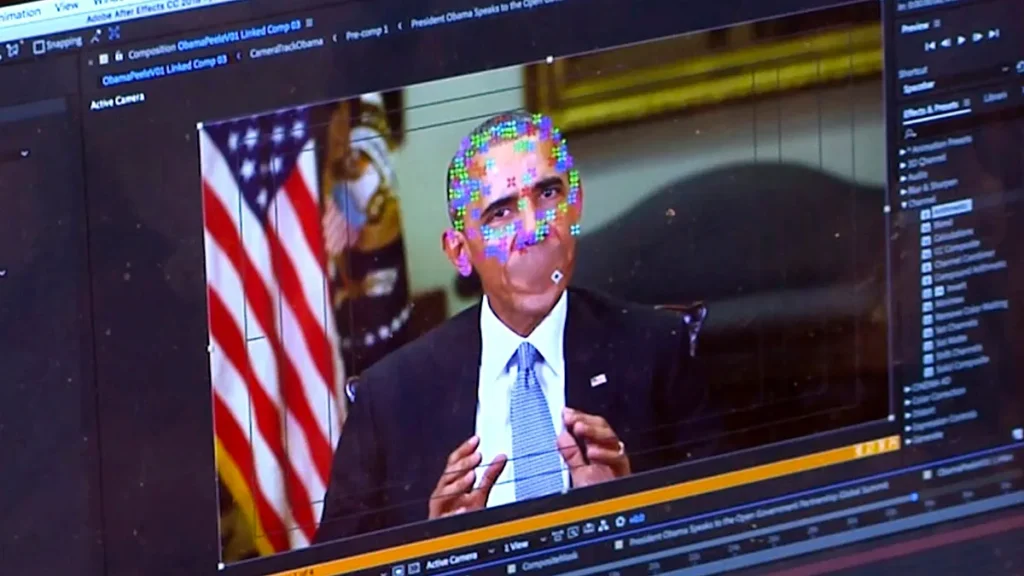

A deepfake is AI-generated synthetic media that convincingly mimics a person’s voice, appearance, or behavior. Though originally used for entertainment or satire, deepfakes have entered the political mainstream, with alarming consequences.

In January 2024, deepfake robocalls mimicking President Joe Biden’s voice told New Hampshire voters to skip the Democratic primary. These AI-generated calls were traced to operatives using voice cloning tools, raising red flags about election manipulation.

Just weeks later, fake videos appeared online showing presidential candidates saying inflammatory things they never said. While some viewers quickly recognized the content as fake, others did not, which fueled outrage and confusion.

Why This Matters for U.S. Democracy

AI-powered deepfakes are particularly dangerous because of their realism. Unlike Photoshopped images or meme-based disinformation, synthetic media can appear entirely authentic, fooling even well-informed voters and journalists.

Here’s how this technology is disrupting political norms:

1. Misinformation and Voter Confusion

Fake videos and audio recordings can spread rapidly across social media platforms. If a candidate is falsely portrayed making racist, sexist, or unpatriotic remarks, voters may form opinions before the content is debunked.

2. Erosion of Trust in Real Footage

Even authentic recordings are now viewed with skepticism. Politicians caught on tape making controversial remarks can claim, “It’s a deepfake,” even when the recording is real. This dynamic is known as the “liar’s dividend.”

3. Last-Minute Election Sabotage

Bad actors can release deepfakes right before Election Day, too late for fact-checkers or authorities to respond. This “October surprise” tactic could change the outcome of tight races.

4. International Interference

Experts warn that foreign governments could deploy AI-generated propaganda to influence U.S. elections. The same tactics used in 2016 to sow division through social media can now be supercharged with AI tools.

How Campaigns Are Using AI—For Good and Bad

Not all AI use in politics is nefarious. Campaigns on both sides of the aisle are exploring AI for tasks like:

- Generating personalized emails or fundraising messages

- Analyzing voter behavior and preferences

- Creating virtual assistants or chatbots for campaign websites

- Automating video production for campaign ads

But as with any powerful tool, misuse is a major concern. Without oversight, AI can create hyper-targeted disinformation, manipulate public opinion, or even suppress voter turnout.

The Regulatory Response: Too Little, Too Late?

The Federal Election Commission (FEC) and several state legislatures have begun proposing guidelines on the use of AI in political campaigning. Key initiatives include:

- Labeling Requirements: Mandating clear disclosure when AI-generated content is used in campaign ads.

- Bans on Deepfake Deception: Proposals to outlaw intentionally misleading synthetic media close to elections.

- Increased Transparency: Requiring campaigns to publicly disclose the AI tools and platforms used in content creation.

However, critics argue that legislation is lagging far behind the technology. Deepfake tools are widely accessible, and bad actors can operate anonymously or offshore. Enforcement remains a major challenge.

Tech Companies Under Pressure

Big Tech platforms like Meta (Facebook), YouTube, and X (formerly Twitter) are also facing scrutiny. While some platforms have introduced detection tools and content labels, critics say their responses are inconsistent and reactive.

AI detection remains imperfect. Platforms must balance free speech with election security—a delicate and controversial line to walk.

What Can Voters Do?

In this new AI-driven political landscape, media literacy is more important than ever. Voters should:

- Be skeptical of viral content—especially if it’s shocking or emotionally charged.

- Check multiple sources before accepting controversial quotes or videos as true.

- Use reputable fact-checking websites like PolitiFact or Snopes.

- Follow official campaign channels for accurate statements and rebuttals.

Looking Ahead

The 2024 election will be a major test of America’s ability to handle AI’s impact on democracy. While the technology offers benefits in efficiency and outreach, its potential for deception is profound.

Without fast, coordinated action from lawmakers, tech companies, campaigns, and voters, the fallout from deepfake politics could undermine trust in the entire electoral system.

FAQs

1. What is a political deepfake?

A political deepfake is synthetic media—such as video or audio—created with AI to falsely depict a politician saying or doing something they never did. These are often used to mislead voters or discredit candidates.

2. How are deepfakes different from traditional misinformation?

Traditional misinformation often includes misquotes, out-of-context statements, or edited images. Deepfakes, on the other hand, use AI to create highly realistic but completely fake media that can be much harder to detect.

3. Has the government taken steps to stop deepfake misuse?

Yes, the FEC and some state governments have introduced or proposed laws requiring disclosure of AI-generated content and banning deceptive deepfakes close to elections. However, enforcement is still limited.

4. How can voters identify a deepfake?

Signs include odd facial movements, mismatched lip-syncing, and inconsistent lighting. Reverse image searches and online AI detection tools can help check suspicious content. But as the technology improves, many deepfakes may look flawless, making skepticism essential.

5. Are political campaigns allowed to use AI tools?

Yes, campaigns can use AI for legitimate tasks like email automation, voter analysis, and ad generation. However, campaigns that use AI to intentionally mislead voters or create fake content could face legal and ethical scrutiny.

6. Can AI be used positively in politics?

Absolutely. AI can enhance outreach, improve campaign targeting, and even engage voters in more interactive ways. The key is transparency and responsible use.

7. What role do social media platforms play in fighting deepfakes?

Platforms like Facebook, YouTube, and X are under pressure to detect and remove harmful synthetic content. Some use AI to flag suspected deepfakes, but critics say more consistent enforcement is needed.

Conclusion

The intersection of AI and politics is redefining the U.S. electoral landscape. While AI can be a tool for progress, deepfakes and synthetic content are serious threats that must be addressed head-on. As technology accelerates, so too must our systems of accountability, transparency, and civic education.